Third-party open source dependencies are fundamental to modern software development. No one would imagine writing their own XML parser or implementing their own HTTP client when popular, well-tested, community-developed alternatives are easily accessible.

Nevertheless, adopting and using third-party dependencies comes with some caveats. Libraries can:

- suffer from vulnerabilities, which can lead to exploitation.

- be associated with restrictive licenses, exposing software products to legal risks.

- lack support from the community, compromising the long-term viability of the code that depends on them.

Therefore, managing third-party open source dependencies is paramount, and continuous monitoring is necessary to keep risks under control.

At Software Improvement Group (SIG), we help organizations build and maintain high-quality software. We proudly own the world's largest software metrics data warehouse, with more than 200 billion lines of code analyzed over the 20+ years we've been around. This also applies to software composition analysis of third-party open source dependencies, which we perform with SIG Open Source Health.

When we launched our Open Source Health proposition in our Sigrid® platform a few years ago, we did not anticipate its success. In May 2024 alone, we analyzed approximately 15 million open source dependencies in total, conducting more than 100,000 unique analyses for hundreds of customers. These numbers keep increasing week over week, so by the time you read this write-up, I am sure we will be well beyond them.

Nevertheless, this success also brings some interesting challenges and opportunities.

Addressing software composition analysis challenges and opportunities

The outcome of an Open Source Health assessment has historically been a fact-based view of:

Vulnerabilities

Vulnerable dependencies can lead to severe consequences, such as data breaches or compromised system functionality.

Freshness

Out-of-date dependencies and a lack of continuous dependency refresh can create risks related not only to the security of a software system (older dependencies are often vulnerable, for example) but also to its maintenance.

License use

Dependencies with restrictive licenses may conflict with the intended end users, distribution methods, or business model. Failure to comply with licensing terms can lead to legal repercussions.

Activity

Dependencies that lack community support, and therefore maintenance, may never have their known vulnerabilities addressed, and compatibility issues may arise as the surrounding software naturally evolves.

Stability

For production code, stable dependencies are a better choice than beta or pre-release versions, which may be buggy or not yet thoroughly tested.

Management

Using package managers streamlines most operations around third-party dependencies: they typically offer efficient, centralized version management, simplify dependency resolution, and reduce the risk of compatibility issues.

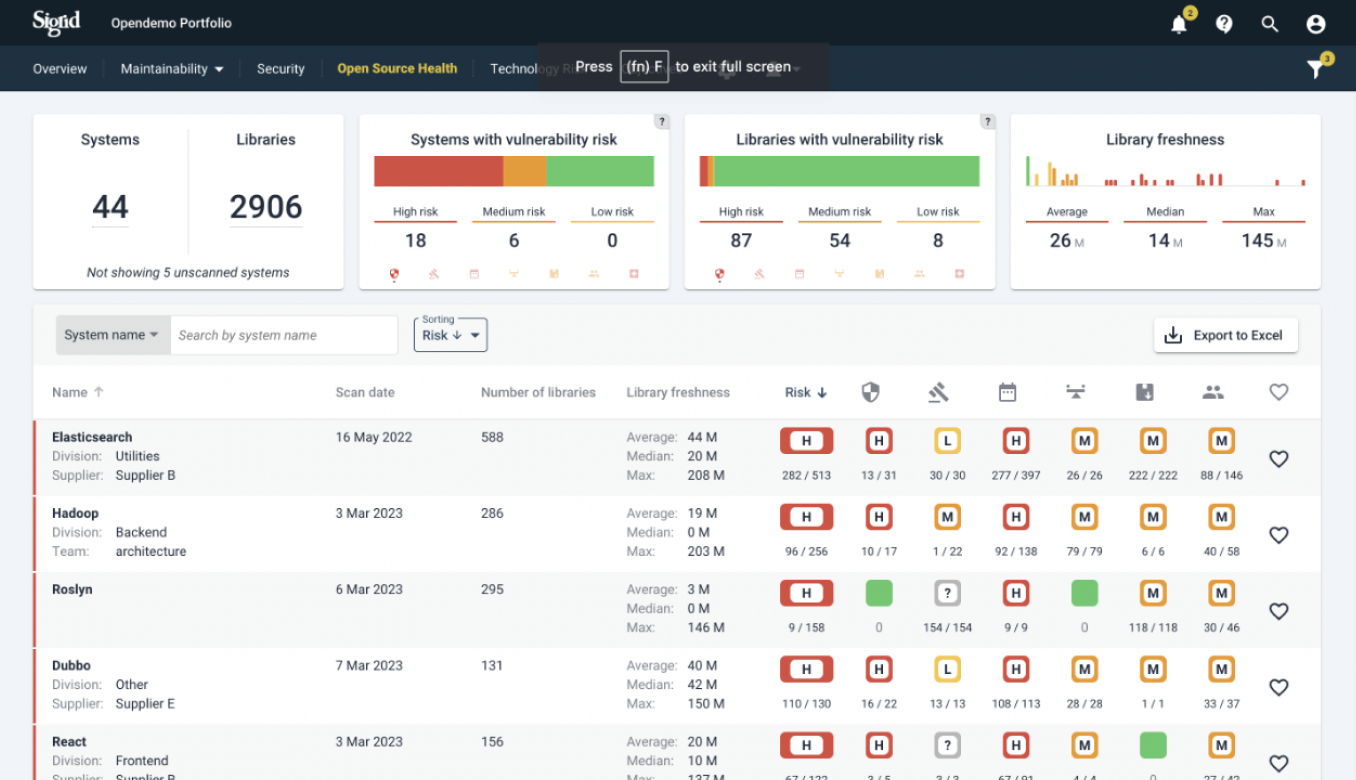

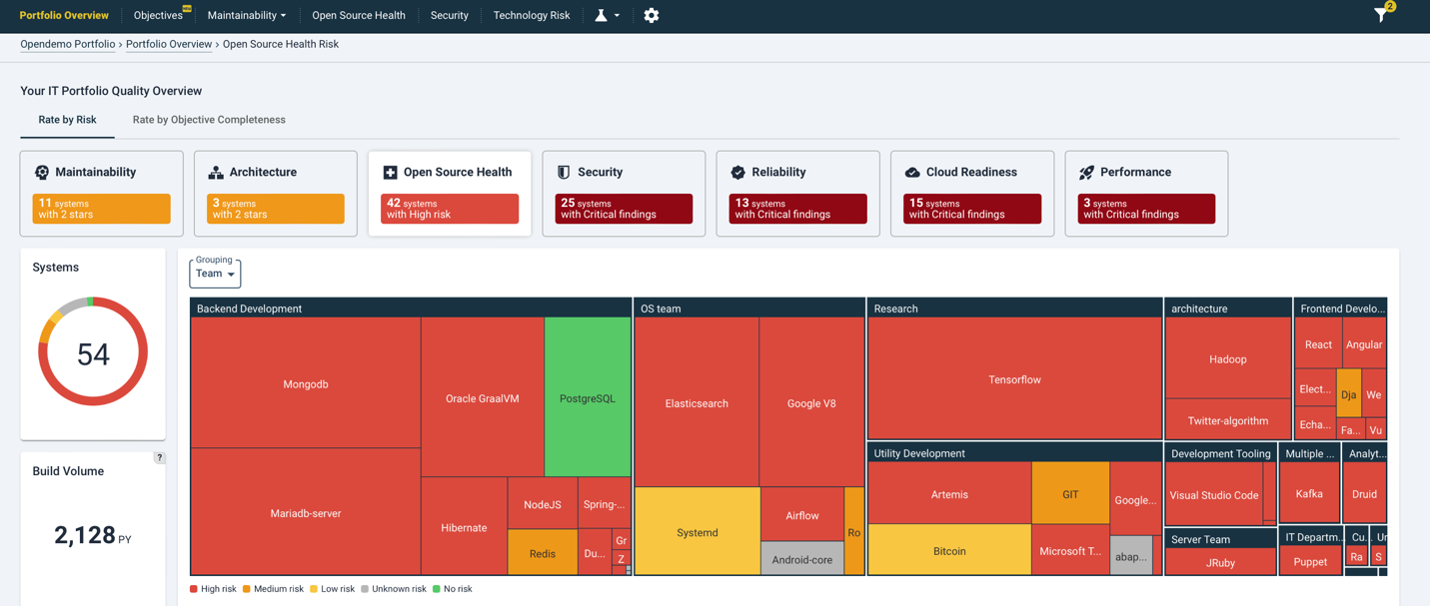

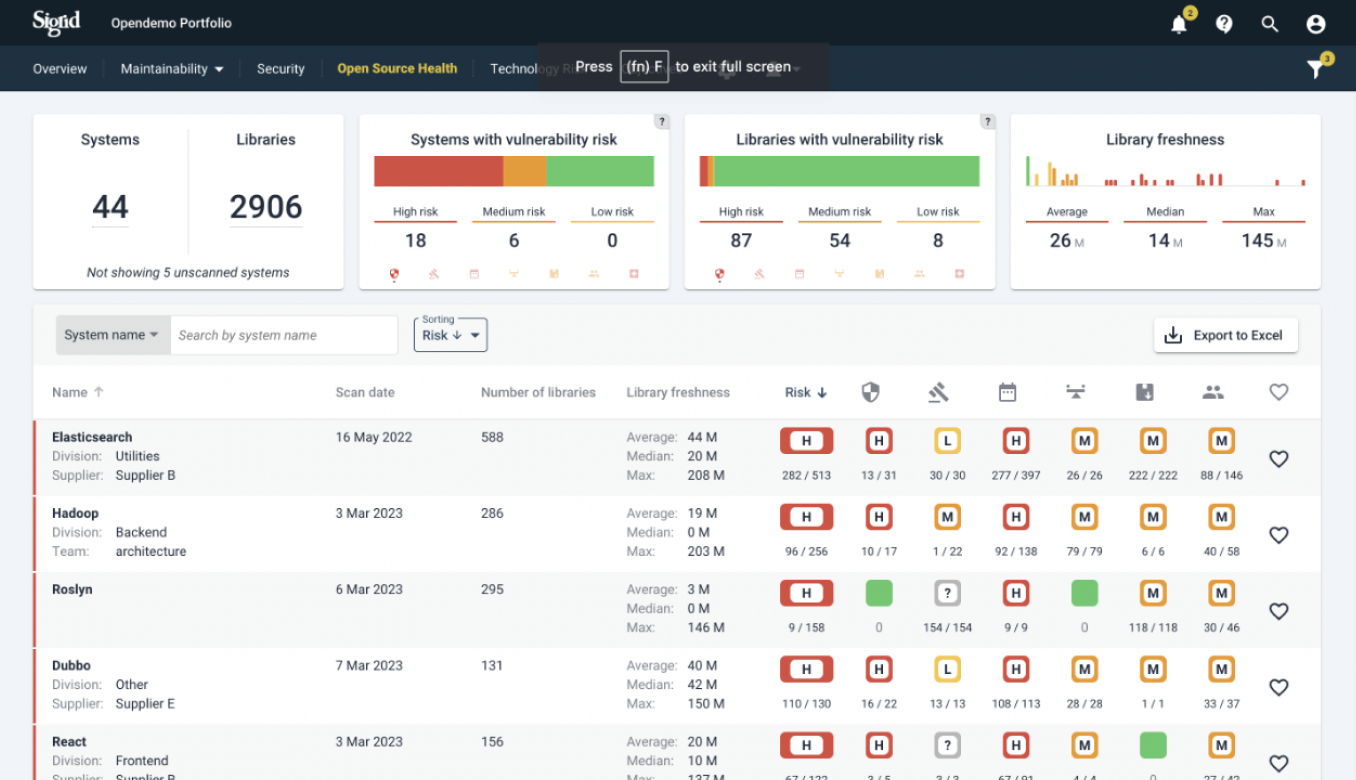

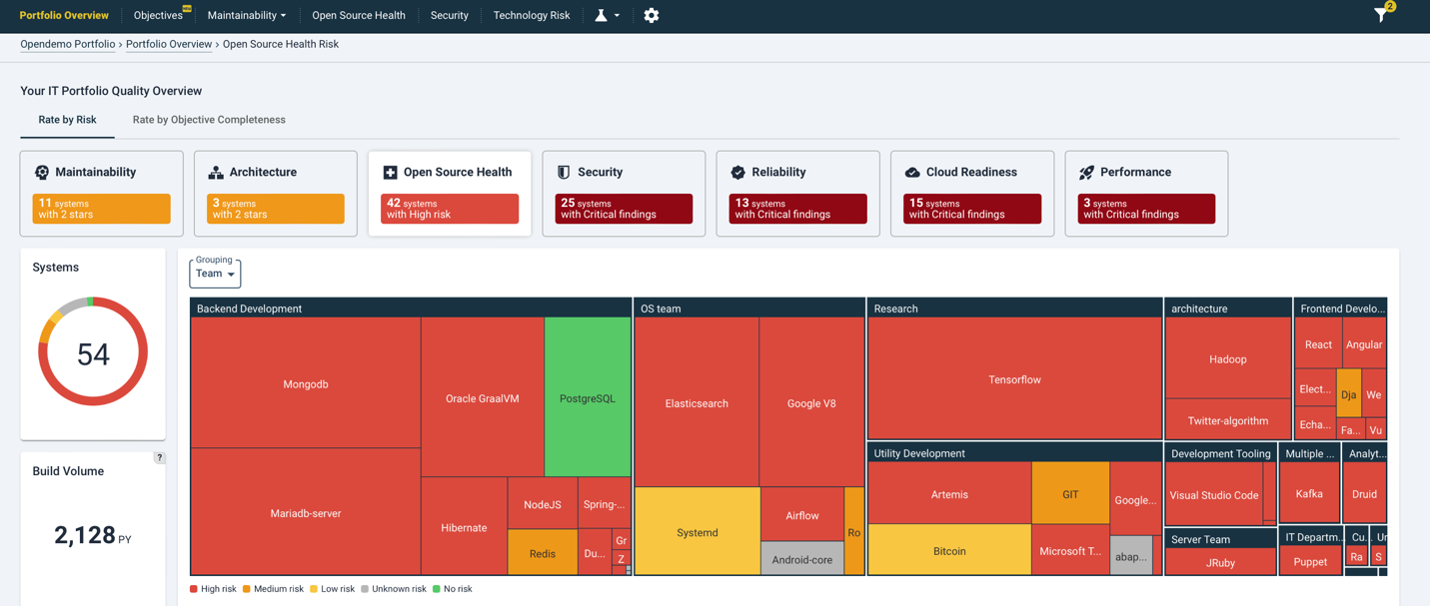

The Open Source Health assessment reported opinionated risks on a scale from low to high and aggregated the results in our Sigrid platform, showing the highest risk associated with a system's open source dependencies.

Sigrid portfolio overview for Open Source Health with risk-based measurements.

Overall, customer feedback on Open Source Health has always been very positive for system-level findings, where SIG reports the risks associated with a single software product and indicates how to prioritize dependency upkeep.

On the other hand, there was still room for improvement.

For example, when looking at a portfolio of, say, 50 or more applications, IT managers, CISOs and software architects asked whether the findings could be made more actionable and balanced.

The portfolio overview flagged the vast majority of applications as having severe risks, since even a few out-of-date third-party dependencies would classify an entire system as needing rather urgent care. Furthermore, the way findings were reported did not allow for comparison with the market.
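To make the problem concrete, here is a minimal Python sketch of worst-case aggregation, in which a system inherits the highest risk found among its dependencies. The `Risk` scale and the `system_risk_worst_case` helper are hypothetical illustrations, not Sigrid's actual implementation, and the per-dependency risk levels are assumed to be known already.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Hypothetical ordered risk scale, from no risk up to high risk."""
    NONE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def system_risk_worst_case(dependency_risks: list[Risk]) -> Risk:
    """Worst-case aggregation: the system inherits the highest risk of any dependency."""
    return max(dependency_risks, default=Risk.NONE)


# A system with 198 healthy dependencies and only 2 badly out-of-date ones
dependencies = [Risk.NONE] * 198 + [Risk.HIGH] * 2
print(system_risk_worst_case(dependencies).name)  # prints "HIGH"
```

Because just 1% of the dependencies decide the outcome, almost any large system ends up at the top of the scale, and nothing in the result says how it compares with other systems.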

This left a bitter taste in our mouths as well, because it was not possible to make an informed, data-driven decision at first glance about where a consultant or a customer should start tackling a portfolio from this perspective.

That's when it clicked for us.

Why can't we reuse our benchmark-based approach to measuring software maintainability [1,2,3] and apply it to open source dependency measurements? Can we make it a Quality Model? This would prove invaluable in large portfolios of applications, where risks are present, yet not everything requires immediate care.

Our new Open Source Health Quality Model

After about one and a half years of data analysis, coding, testing, troubleshooting, automation, validation, and product integration, involving a team of 14 people across four departments at SIG, we released the new Open Source Quality Model in Sigrid® just a few days ago.

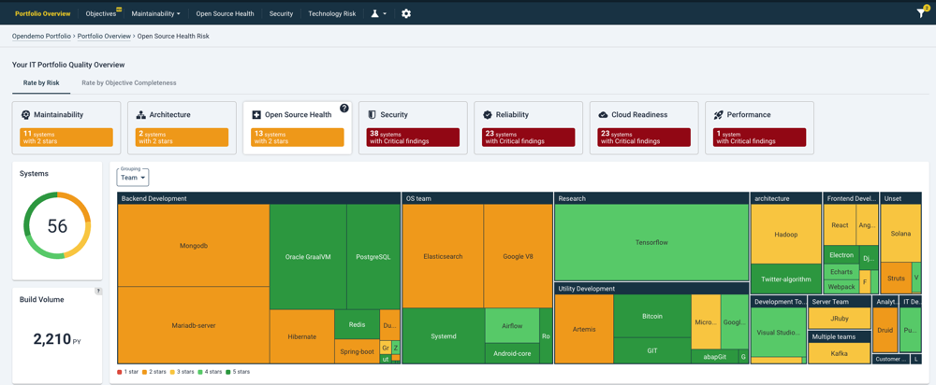

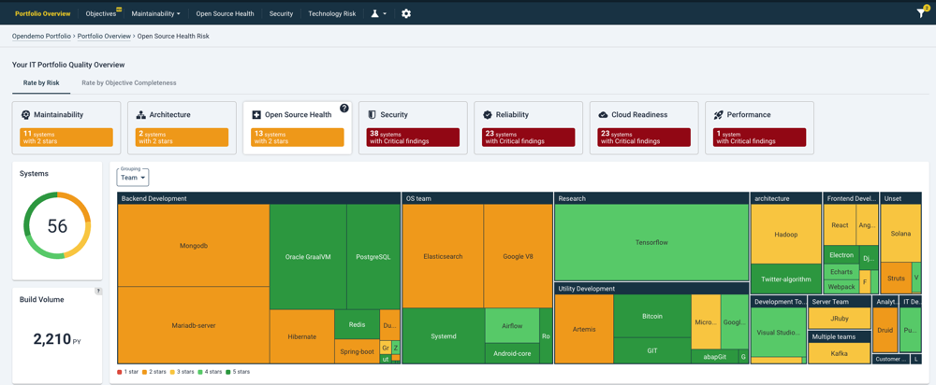

The new data-driven, benchmarked way of measuring and rating open source dependencies is transparent and allows comparison with the market, while remaining easy for stakeholders to understand.

The model rates five of the six properties listed above from one to five stars; stability is not rated and is excluded from the evaluation, as our analyses showed that it does not bring enough value in our context. The Quality Model aggregates the results of the individual measurements into a system-wide rating. The results are consistent with our other benchmarked models, where a 3-star rating can be defined as 'market average'. You can read more about this approach here.
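The general idea of a benchmark-based rating can be illustrated with a minimal Python sketch. It assumes, purely for illustration, one lower-is-better metric per property (for example, a hypothetical percentage of out-of-date dependencies) and a calibration in which 5%, 30%, 30%, 30% and 5% of benchmark systems receive five, four, three, two and one stars respectively, so the middle band represents the market average. The helper names, the calibration shares and the unweighted mean used for the system-wide rating are all assumptions, not the thresholds or aggregation Sigrid actually uses.

```python
from bisect import bisect_left
from statistics import mean

# Hypothetical calibration (5/30/30/30/5 split): cumulative percentages of
# benchmark systems rated 5 stars, 4+ stars, 3+ stars and 2+ stars.
CUT_PERCENTILES = [5, 35, 65, 95]


def derive_thresholds(benchmark: list[float]) -> list[float]:
    """Derive four rating thresholds for a lower-is-better metric from benchmark values."""
    ordered = sorted(benchmark)
    n = len(ordered)
    # Value at or below which roughly p% of the benchmark systems fall
    return [ordered[max(p * n // 100 - 1, 0)] for p in CUT_PERCENTILES]


def star_rating(value: float, thresholds: list[float]) -> int:
    """Map a metric value to 1-5 stars: each threshold exceeded costs one star."""
    return 5 - bisect_left(thresholds, value)


def system_rating(property_ratings: dict[str, int]) -> float:
    """Hypothetical aggregation: unweighted mean of the per-property star ratings."""
    return mean(list(property_ratings.values()))


# Usage with made-up data: 100 benchmark systems with metric values 0..99
thresholds = derive_thresholds(list(range(100)))
print(thresholds)                   # [4, 34, 64, 94]
print(star_rating(20, thresholds))  # 4
print(system_rating({"vulnerabilities": 4, "freshness": 2, "license": 5,
                     "activity": 3, "management": 3}))  # 3.4
```

Under such a calibration, a system only drops to one or two stars when it does worse than most of the benchmark, which is what lets a large portfolio be triaged instead of being flagged wholesale. Recalibrating simply means re-deriving the thresholds from a newer benchmark.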

Customer feedback and model benefits

The initial response to the model release has been quite reassuring: SIG consultants find the familiar rating approach invaluable for providing recommendations on key, business-critical systems in large portfolios.

Among our customers, early adopters have told us exactly what we were aiming for: it is far more insightful than the previous overview.

IT managers can now see exactly where risks lie, as ratings allow comparison with the market, but also across the rest of their application portfolio. This enables decisions that were simply not possible before.

Sigrid portfolio overview for Open Source Health with benchmark-based measurements.

A common and interesting scenario we observe is that of software systems that belong to the same portfolio and share a technology stack, yet receive dramatically different Open Source Health ratings.

One possible cause is a lack of governance and management of third-party open source dependencies, which leaves each software team to decide how to manage its own.

To this end, SIG has also published Guidelines for healthy use of open source. In this extensive document, we provide concrete, actionable guidelines on how to achieve healthy use of third-party libraries and frameworks in your product. Where applicable, the guidelines indicate how to use the Open Source Quality Model in Sigrid to achieve this.

Conclusion and next steps

The release of the Open Source Quality Model marks our third benchmark-based approach to measuring and rating software systems, after ISO25010 TÜVIT Maintainability and Architecture Quality. This also allows Sigrid to streamline and unify the way findings are reported, providing a consistent message across its various capabilities.

We are committed to improving the current state of the market and to keep raising the bar so that everyone meets higher standards, for a healthier digital world.

As is common for Quality Models at SIG, the Open Source Health model will be recalibrated every year. This ensures the model stays current with market trends, relevant in a fast-paced digital era, and useful to our customers. As domain experts and a risk-averse cohort, we at SIG would probably adopt even stricter guardrails, but that would lead us back into the trap we wanted to get out of. Our quest is to keep striving for the right balance between risk evaluation and market comparison.

Where and how to find out more

How does your product's use of third-party dependencies rank against the industry and best practices?

If you or your organization uses Sigrid®, the Open Source Health Quality Model is already available for you to use. You can find more information about it in our public documentation for systems and for large portfolios.

On the other hand, if your organization would like to improve the quality of its third-party open source dependencies, Sigrid® can support you in this process: click here to speak to an expert.

[1] I. Heitlager, T. Kuipers and J. Visser, "A Practical Model for Measuring Maintainability," 6th International Conference on the Quality of Information and Communications Technology (QUATIC 2007), Lisbon, Portugal, 2007, pp. 30-39, doi: 10.1109/QUATIC.2007.8.

[2] T. L. Alves, C. Ypma and J. Visser, "Deriving metric thresholds from benchmark data," 2010 IEEE International Conference on Software Maintenance, Timisoara, Romania, 2010, pp. 1-10, doi: 10.1109/ICSM.2010.5609747.

[3] T. L. Alves, J. P. Correia and J. Visser, "Benchmark-Based Aggregation of Metrics to Ratings," 2011 Joint Conference of the 21st International Workshop on Software Measurement and the 6th International Conference on Software Process and Product Measurement, Nara, Japan, 2011, pp. 20-29, doi: 10.1109/IWSM-MENSURA.2011.15.

About Software Improvement Group

Software Improvement Group (SIG) leads in traditional and AI software quality assurance, empowering businesses and governments worldwide to drive success with reliable and robust IT systems. Sigrid® - its software excellence platform - analyzes the world's largest benchmark database of over 200 billion lines of code across more than 18,000 systems in 300+ technologies, and intelligently recommends the most crucial initiatives for organizations. SIG complies with multiple ISO/IEC standards, including ISO/IEC 27001 and 17025, and has co-developed ISO/IEC 5338, the new global standard for AI lifecycle management.

SIG was founded in 2000 and has offices in New York, Copenhagen, Brussels, and Frankfurt, and is headquartered in Amsterdam. Sigrid®, together with expert consultants, and nearly 25 years of industry-leading research, position SIG as the foremost authority on software excellence.