AI for executives: 4 actions the board should take to become AI ready

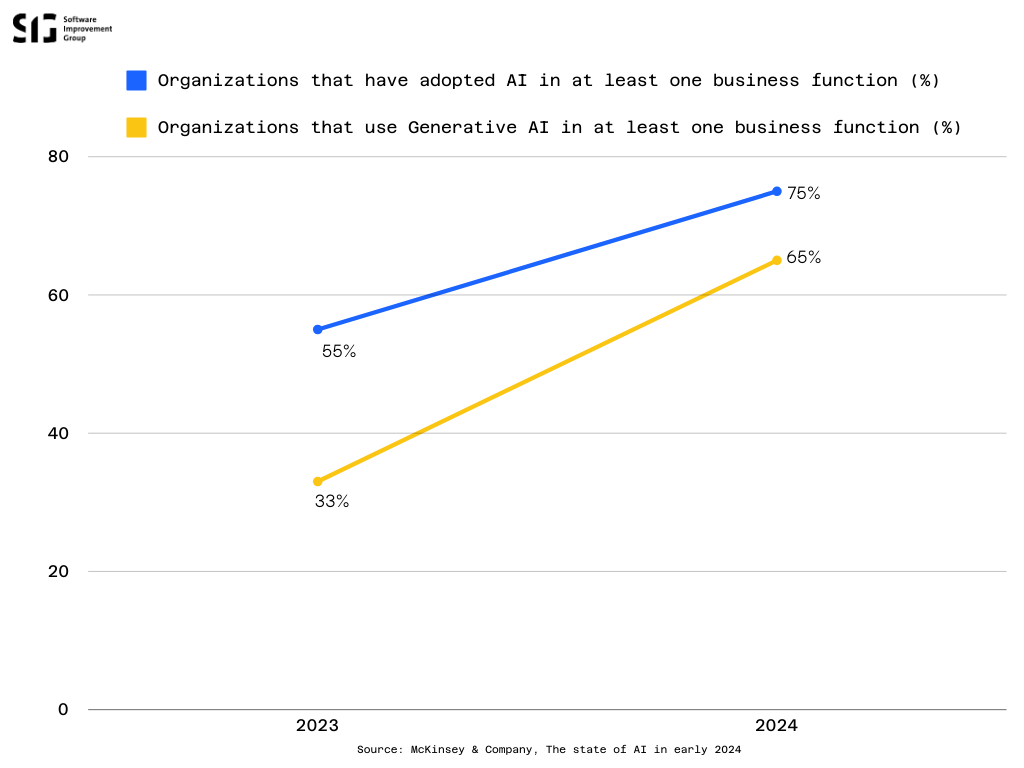

The results are in: 2024 is the year organizations truly began using and driving real business value from artificial intelligence (AI). While McKinsey’s 2023 AI survey reported that AI adoption hadn’t reached 66% in any region, by early 2024, 72% of businesses worldwide were using AI for at least one business function.

AI has the potential to drive massive growth, but it also introduces new risks and complexities. McKinsey found that 63% of businesses see generative AI as a “high” or “very high” priority, yet 91% admit they aren’t “very prepared” to implement it responsibly.

As a board member, you have a unique opportunity to help your organization benefit from AI. But with that opportunity comes the responsibility to ensure AI is used ethically, securely, and in compliance with evolving regulations. So, are you ready to lead your organization in this AI-driven future?

In this blog post, we’ll explore four practical steps you can take to ensure your organization is prepared to thrive in the AI era, based on insights from our newly released AI readiness guide.

Why the board must lead AI initiatives

Before diving into specific steps, it’s important to recognize why the board must lead AI initiatives in the first place.

AI offers transformative opportunities, but the inherent risks—ethical, regulatory, and operational—make board oversight essential. Board members are accountable for ensuring AI aligns with the organization’s long-term strategy and complies with legal standards.

Successful AI adoption isn’t just about using the latest technology—it’s about balancing innovation with responsible practices.

Step 1: Key AI topics for the board to know

As a board member, you don’t need to become an AI expert, but you do need to know enough about AI to make informed decisions. This means learning about AI’s potential, its limitations, and ethical considerations.

Let’s look at the key areas of AI knowledge—from AI basics to security risks—you need to grasp to steer your organization confidently into the AI era:

Learn the AI basics

In simple terms, AI refers to systems that analyze data to generate outputs—whether it’s a recommendation, prediction, or decision. Unlike traditional software, which operates based on predefined rules, AI learns from data to perform tasks autonomously.

While tools like ChatGPT make AI seem like the next big thing, its history actually stretches back to the 1950s. As Dr. Lars Ruddigkeit from Microsoft pointed out at our recent SCOPE 2024 conference, “The field was founded by Alan Turing, and his first publication was in 1950. We’ve been building on his research for decades to reach the breakthroughs we see today.”

Become aware of AI’s risks

Despite AI’s recent progress and incredible potential, it’s important to remember the technology doesn’t come without risks. Here are a few examples:

- Cybersecurity threats: in 2019, scammers used deepfake audio to impersonate a company executive, tricking employees into transferring €220,000.

- AI bias: in 2018, Amazon had to scrap its AI recruitment tool after it was found to discriminate against female candidates.

- Misinformation: models like ChatGPT and Google Bard have been known to confidently generate false information, including entirely fabricated legal citations or news stories.

These are just a few examples of the risks that come with AI. However impressive the technology is, organizations shouldn’t be blinded by the possibilities it offers. They need to remain vigilant and weigh the risks it brings.

Keep up with AI laws and regulations

Thankfully, as AI continues to advance, regulations are quickly evolving to keep up. Staying on top of legal frameworks like the EU’s AI Act and emerging US legislation is crucial for ensuring that your organization uses AI responsibly and complies with the law.

However, during his keynote speech at SCOPE 2024, Rob van der Veer added an important side note:

“Don’t be blinded by just the risks in the AI Act, these are about harm to individuals and society, super important, but it doesn’t talk about the harm to your own business. Include that in your risk analysis as well.” – Rob van der Veer, Senior principal expert AI at Software Improvement Group.

A great starting point for your business to align its AI initiatives with best practices is to get familiar with relevant ISO standards.

What is ISO?

ISO is the International Organization for Standardization, one of the oldest non-governmental international organizations. It brings global experts together to establish the best way of doing things, from making a product to managing a process.

Standards like ISO/IEC 5338, co-developed by SIG, provide best practices that can help your organization manage AI risks effectively while staying competitive in a fast-changing landscape.

Be aware of AI impacts

AI doesn’t just affect operations—it can change the way employees work, how customers interact with your business, and even influence societal outcomes.

Being aware of both the benefits and ethical concerns, like privacy or bias, will help you make well-rounded decisions that balance innovation with responsibility.

Mitigating AI security risks begins with awareness

AI introduces unique security risks, from data privacy concerns to model manipulation and cybersecurity threats.

In fact, according to Deloitte, 65% of business leaders expressed concerns about the cybersecurity threats posed by AI, while 64% remain uncertain about how AI handles confidentiality and data protection.

Boards should be aware of the basic security measures required to safeguard AI systems, such as minimizing data collection and establishing strong oversight and validation processes.

Step 2: Assign AI roles and responsibilities

The board plays a critical role in ensuring the effective governance of AI within the organization, and this starts by assigning clear roles and responsibilities.

Define key AI responsibilities

One of the first steps is to establish an AI committee that includes board members, legal experts, and technical AI specialists. This team will be responsible for overseeing AI governance, ensuring security, maintaining compliance with regulations, and addressing ethical considerations.

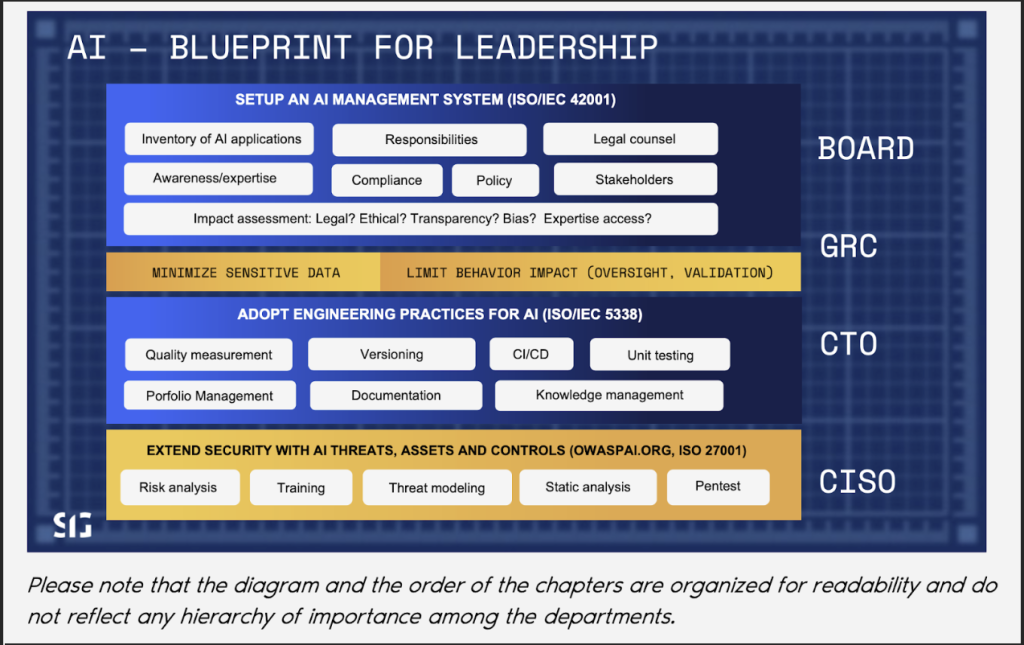

Take a look at the diagram below for an example overview of responsibilities per business function.

Establish an AI management system

The board should also implement an AI management system to oversee AI risks and compliance, much like how information security is managed.

As Rob van der Veer, SIG’s AI expert, stressed at our recent SCOPE 2024 conference, “You really need to know where you are using AI, where you are building AI in order to control it. So you need an AI management system, just like you can have an Information Security Management System. It has very similar characteristics. You can use the ISO 42001 for this.”

Step 3: Build on existing frameworks

When implementing AI, there’s no need to reinvent the wheel. Instead of creating new processes, the board should focus on integrating AI governance into existing organizational frameworks, such as risk management, compliance, and security systems.

By leveraging what’s already in place, you can manage AI risks efficiently while ensuring compliance and scalability.

Leverage existing systems

AI should be treated like any other part of your organization’s software landscape. This means applying your current frameworks—like version control, data governance, and compliance systems—to AI initiatives.

As Rob van der Veer emphasized, “We’re seeing AI in isolation too much… just build on your existing systems.” This approach ensures that AI becomes a seamless part of your organization rather than an isolated effort.

Set up an AI committee

To oversee this integration, the board should consider establishing a cross-functional AI committee. This committee—comprising members from legal, compliance, security, and AI development teams—can manage AI risks, ensure compliance, and uphold governance standards.

With the right people in place, your organization can leverage existing processes while maintaining control over AI’s unique challenges.

Step 4: Implement AI policies and training

AI is transforming business at a rapid pace, and the board must take an active role in ensuring the organization is ready to meet the challenges and opportunities it brings. This includes setting clear AI policies and investing in continuous training to equip board members and employees with the necessary skills.

Develop and implement AI policies

The board should oversee the creation of AI policies that manage risks and ensure compliance with both internal goals and external regulations.

Rather than starting from scratch, it’s more efficient to adapt existing policies, such as those in your Information Security Management System (ISMS). These policies should provide clear guidelines on how AI can and cannot be used and should be regularly reviewed to keep pace with evolving AI laws and standards.

Upskill the board and employees

To keep up with AI advancements, the board should ensure ongoing AI education across the organization. This includes basic AI literacy for all employees and advanced training for key technical roles.

This may be more urgent than you think.

According to Article 4 of the EU AI Act, as of February 2nd, 2025, organizations operating in the European market must ensure adequate AI literacy among employees involved in the use and deployment of AI systems.

Beyond compliance, integrating AI into existing training programs and creating a community of practice, where teams share knowledge and best practices, will encourage collaboration and build internal expertise. It’s also important to tap into external resources, such as partnering with AI experts or legal counsel, to stay ahead of industry trends.

The board’s role in AI success

As outlined above, the board plays a crucial role in guiding AI initiatives, ensuring the right balance between seizing opportunities and managing risks. This means staying accountable for AI’s ethical use, maintaining compliance, and aligning AI projects with the broader business strategy.

But successful AI adoption doesn’t stop there—it requires continuous oversight, adapting policies as AI evolves and new regulations come into play.

To help your organization lead the way in AI, our AI Readiness Guide, written by SIG’s AI expert Rob van der Veer, provides a practical, step-by-step approach. With 19 actionable steps covering governance, risk, development, and security, this guide is designed for board members and leaders ready to take charge of AI.

Download the free guide and get started on your journey to seamless AI integration today.