11 October 2024

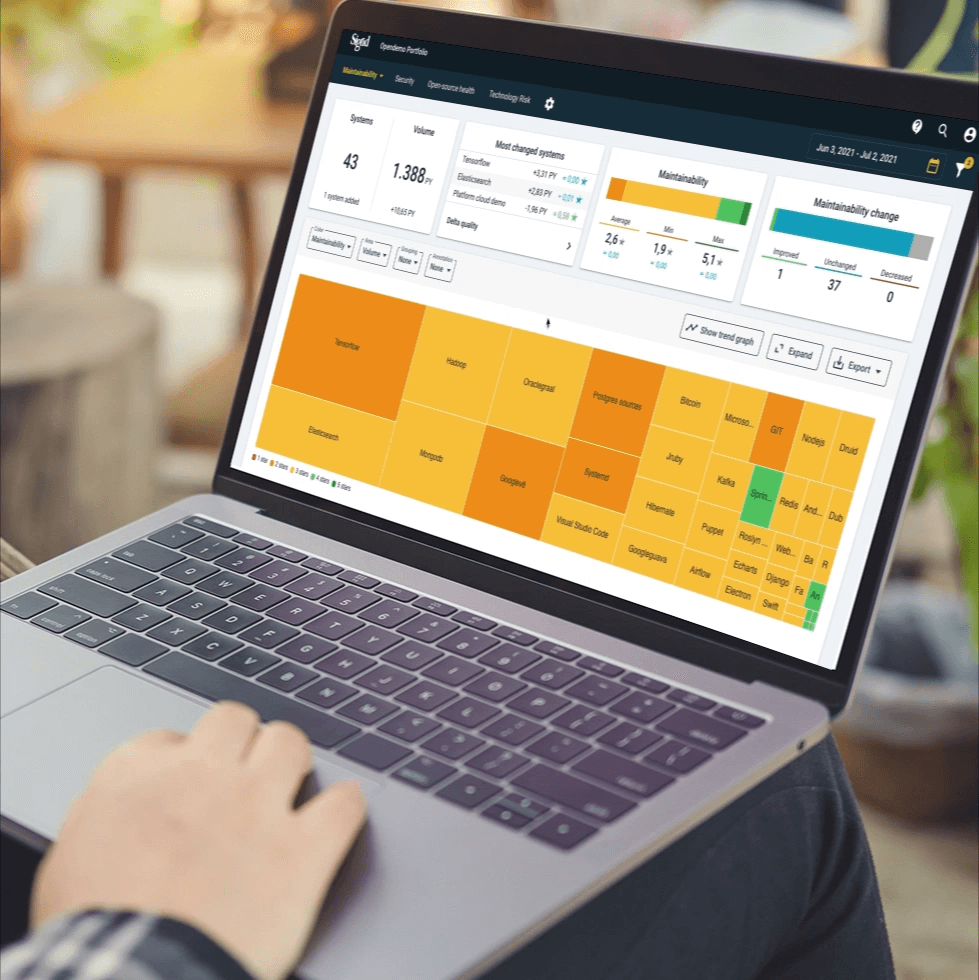

Written by: Software Improvement Group

The rapid adoption of artificial intelligence (AI) is transforming industries at an incredible pace. In fact, 77% of companies are already using or exploring AI, making it the fastest-adopted technology in history.

But despite AI’s rise in popularity, many businesses still struggle with integrating it smoothly into their existing systems.

Too often, businesses assume they need to create entirely new processes for AI, which can result in isolated systems, confusion, duplication, and inefficiencies. At our recent SCOPE 2024 conference, SIG’s AI expert, Rob van der Veer, put it this way: “We’re seeing AI in isolation too much.”

It’s important to treat AI for what it is: software with unique characteristics.

With that in mind, the implementation of AI becomes a lot less daunting. So, instead of starting from scratch, organizations can build on their existing governance, risk, compliance, and security frameworks.

In this blog post, we’ll discuss how your business can successfully adopt AI by expanding on the frameworks you already have, rather than creating separate processes for AI.

When integrating AI, many organizations fall into the trap of thinking they need to overhaul their systems entirely. But the key to successful AI adoption is often extending existing frameworks rather than reinventing the wheel.

As Rob explained: “We’re working with clients who are transitioning AI from the lab into the real world, where it suddenly needs to perform and be scalable, documented, transferable, maintainable, secure, legal.”

The reality is, building on your current structures can provide a smoother, more efficient path to AI integration.

To successfully integrate AI into existing processes, it should be treated as part of your overall software landscape—not as something separate.

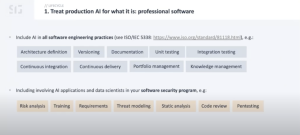

“Treat production AI for what it is: professional software,” explained Rob. “Make sure you involve all your AI engineering activities in your regular software engineering program, like your data scientists, apply versioning to the models, and to their data, let them document their experiments, etc.”

As highlighted in ISO/IEC 5338, the new global standard for AI lifecycle management co-developed by SIG, AI systems should be viewed and managed just like traditional software—only with some unique characteristics.

These include things like the tendency for models to become stale over time, necessitating regular retraining, or the fact that AI behavior is driven by data, making that data both a source of opportunity and risk.

Instead of creating new processes, organizations should extend their existing software lifecycle frameworks, allowing them to benefit from established practices, processes, and tools. ISO/IEC 5338 is not the only standard relevant to AI implementation; we’ve covered the others in a separate, detailed article.

Software documentation serves as a resource for everyone involved in the creation, deployment, and use of a software application. It guides the development process while also providing essential information for tasks like installation and troubleshooting.

Just like in regular software development, proper documentation is also crucial for AI projects and includes the following key practices:

These practices help prevent AI projects from becoming isolated, undocumented, or challenging to maintain over time.
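To make the idea of versioning models and their data and documenting experiments a little more concrete, here is a minimal Python sketch. It is an illustration only, not a SIG tool or anything prescribed by ISO/IEC 5338: the names `content_hash`, `ExperimentRecord`, and `record_experiment` are hypothetical, and in practice you would most likely rely on the version control and documentation tooling your software teams already use.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def content_hash(data: bytes) -> str:
    """Return a short SHA-256 fingerprint that changes whenever the content changes."""
    return hashlib.sha256(data).hexdigest()[:12]


@dataclass(frozen=True)
class ExperimentRecord:
    """Hypothetical record tying one experiment to exact model and data versions."""
    name: str
    model_version: str
    data_version: str
    notes: str


def record_experiment(name: str, model_bytes: bytes, data_bytes: bytes, notes: str) -> ExperimentRecord:
    """Document an experiment together with fingerprints of its model and training data."""
    return ExperimentRecord(
        name=name,
        model_version=content_hash(model_bytes),
        data_version=content_hash(data_bytes),
        notes=notes,
    )


if __name__ == "__main__":
    # Placeholder bytes stand in for a serialized model and a training data file.
    record = record_experiment(
        "churn-baseline",
        model_bytes=b"serialized model weights",
        data_bytes=b"customer_id,churned\n1,0\n2,1\n",
        notes="Baseline model, documented alongside the code change that produced it.",
    )
    print(json.dumps(asdict(record), indent=2))
```

Because the record is plain data, it can be stored next to the code in the same repository and reviewed like any other change.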

To maximize the potential of AI and avoid inefficiencies, it’s critical to prevent AI from becoming isolated from the rest of your organization. Treating AI separately can create silos—areas where AI functions independently of other systems and teams—leading to duplication, inefficiencies, and increased risks.

Let’s take a look at some key ways to avoid silos when implementing AI in your organization:

It’s crucial to integrate AI into existing systems rather than developing entirely new, isolated processes. AI should be viewed as an extension of your current software and security systems.

This means AI models and assets should be governed by the same security, compliance, and risk management frameworks that apply to traditional IT systems.

Below is an overview of the existing practices your organization should incorporate AI into:

Creating separate governance or security structures specifically for AI can lead to fragmentation, making it difficult to manage AI risks consistently across the organization.

When AI systems are isolated, there is a higher chance of duplicating efforts related to security, compliance, and governance, which can add complexity and operational risk.

Siloed AI initiatives may also limit opportunities for cross-functional collaboration. By separating AI teams from traditional software and security teams, you risk missing out on shared insights and best practices that could strengthen both AI and traditional systems.

Organizations should also build upon current practices like risk assessments, security policies, version control, and data governance.

By applying these existing standards to AI systems, businesses can ensure that AI isn’t treated as a disconnected entity, but rather as an integrated part of the organization’s overall IT and business strategy.

Encouraging cross-functional collaboration helps to prevent isolation of AI. AI engineers and traditional software engineers should work together to ensure AI-specific assets, threats, and controls are integrated into the business’s overall strategy.

By involving AI teams in processes like threat modeling, platform development, and knowledge sharing, companies can ensure AI developments align with broader IT and security strategies. They can also tap into existing expertise across departments, creating a more unified approach to AI and software development.

When incorporating AI into your existing security processes, it’s also essential to build on your current security measures. This means adapting traditional security practices, like encryption and access control, to address the unique challenges AI presents.

AI systems should be treated like any other software, so basic security measures still apply. This includes:

AI brings unique risks that require some tweaks to traditional security measures. For instance:

AI systems depend heavily on external data, which introduces additional security requirements. This includes:

By adapting your current security framework to include these AI-specific considerations, you can effectively manage the risks while leveraging the benefits of AI.

There’s no denying that AI introduces unique risks, but these risks can and should be managed with existing risk management frameworks, without creating separate systems or processes.

Let’s take a look at how you can tackle AI-specific risks like deepfakes and data poisoning by integrating them within your current risk management structures.

As AI technology becomes more common, it brings along some new security challenges that businesses need to be aware of. Understanding these threats is vital for keeping systems and data safe. Let’s take a look at some key examples:

Deepfakes are AI-generated videos or audio that can be manipulated to look real. For instance, in 2019, criminals used AI software to impersonate the voice of a company executive, tricking a firm into transferring €220,000 to a scammer.

Data poisoning happens when bad actors feed false information into AI systems, causing them to behave unpredictably.

For instance, in 2018, the security research team Tencent Keen Security Lab demonstrated how they could remotely compromise the Autopilot system on a Tesla Model S by manipulating road signs.

By recognizing these AI-specific threats and treating them like traditional risks, companies can better integrate them into their existing risk management processes, helping to protect their systems and data more effectively.

Rather than maintaining separate AI risk tracking systems, incorporate AI risks into existing risk registers. This creates a unified risk management approach and aligns AI risks with broader risk management strategies.
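As a rough illustration of what a unified register could look like, the Python sketch below adds AI risks to the same list, with the same scoring, as traditional IT risks. Everything in it is an assumption made for the example: the `Risk` fields, the 1 to 5 likelihood and impact scales, the risk IDs, and the scores themselves are hypothetical, and your own register will have its own structure.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Risk:
    """One entry in a (hypothetical) organization-wide risk register."""
    risk_id: str
    title: str
    category: str    # e.g. "security", "compliance", "ai"
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        # Same scoring rule for every risk, AI-related or not.
        return self.likelihood * self.impact


def add_risks(register: List[Risk], new_risks: List[Risk]) -> List[Risk]:
    """Return one combined register instead of keeping a separate AI list."""
    return register + new_risks


def top_risks(register: List[Risk], limit: int = 3) -> List[Risk]:
    """Rank all risks together, highest score first."""
    return sorted(register, key=lambda risk: risk.score, reverse=True)[:limit]


if __name__ == "__main__":
    existing = [
        Risk("R-001", "Unpatched web server", "security", 3, 4),
        Risk("R-002", "Missing data processing agreement", "compliance", 2, 3),
    ]
    ai_risks = [
        Risk("R-003", "Deepfake voice fraud targeting finance staff", "ai", 2, 5),
        Risk("R-004", "Poisoned training data", "ai", 2, 4),
    ]
    for risk in top_risks(add_risks(existing, ai_risks)):
        print(f"{risk.risk_id} [{risk.category}] {risk.title}: score {risk.score}")
```

The point of the sketch is that AI risks get a category, not a separate system, so they are prioritized against everything else the organization already tracks.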

As we’ve learnt above, implementing AI doesn’t mean doing everything differently. By building on what’s already there, organizations can ensure a smooth, secure, and compliant transition into the AI era.

If you’re ready to integrate AI into your organization, it doesn’t have to be as daunting as you may think.

Our brand-new AI Readiness Guide, written by our very own Rob van der Veer, AI expert and author of AI standards including ISO/IEC 5338 and the EU AI Act security standard, offers a step-by-step approach to help you do just that.

With 19 actionable steps covering governance, risk, development, and security, it’s tailored for board members, executives, and IT leaders looking to adopt AI without the headaches.

Download the free guide and start your journey toward seamless AI integration today.
