11 October 2024

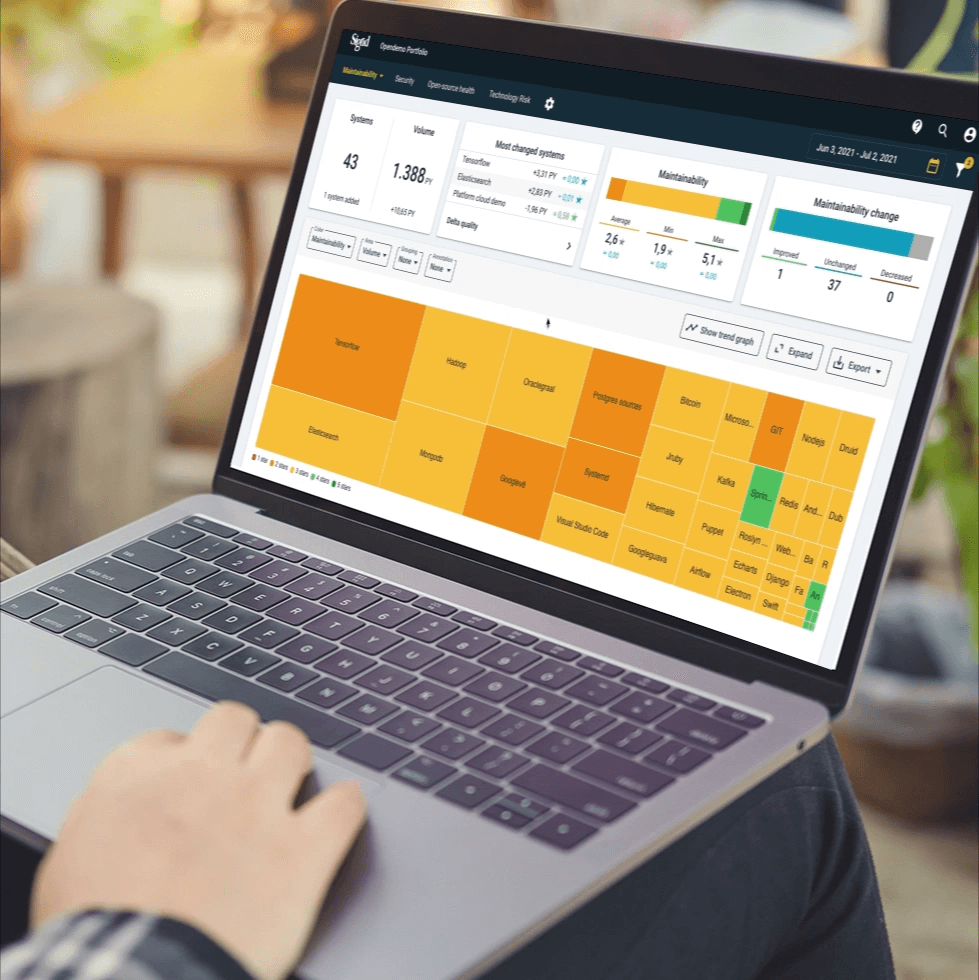

Written by: Software Improvement Group

Artificial intelligence (AI) is weaving its way into the business world, offering huge opportunities for innovation and growth.

In fact, 60% of CIOs have already included AI in their innovation plans, and Gartner predicts that by 2026, over 80% of enterprises will be using GenAI APIs or apps. Moreover, 75% of newly developed enterprise applications are expected to incorporate AI or machine learning models, up from just 5% in 2023.

But with this rapid adoption come growing risks. According to the World Economic Forum, 43% of executives believe we should hit pause or slow down AI development until its potential impacts are better understood.

At our recent SCOPE 2024 conference, SIG’s AI expert Rob van der Veer shared his thoughts about the double-edged nature of AI. “I think you guys can agree with me when I say that the effects of, and the opportunities of, AI are profound,” he said, with some companies reporting up to 40% gains in productivity and efficiency in software development.

But he also raised concerns about what happens when things go wrong. “What if an AI model makes a mistake and sends my browser history to all my friends? Or what if it’s hacked?”

Rob summed it up well: “I think it’s important that we have these two different aspects and alternate between them: the ‘blue sky’ future and the ‘burning earth’ future, if you will.”

With AI development outpacing companies’ ability to manage its risks, the question is no longer whether AI will transform business, but how businesses can effectively manage AI’s impact without losing control.

In this blog post, we’ll explore both the opportunities and challenges AI presents. We’ll also discuss the impact of legislation and standards and share some practical AI readiness tips to help leaders navigate this tricky terrain.

With 77% of companies using or exploring AI in their businesses, this powerful technology is reshaping industries across the board.

From enhancing data analysis and decision-making to optimizing sales, marketing, and cybersecurity, AI’s reach is profound. As these technologies continue to evolve, new use cases are constantly emerging, further transforming how organizations operate.

Take healthcare, for example. AI is being used to streamline patient care through predictive analytics and personalized treatment plans, helping doctors make faster, more accurate diagnoses.

Last year, AtlantiCare implemented AI-powered pre-operative assessment tools and advanced robotic surgical techniques to enhance the early detection and treatment of lung cancer.

In finance, AI-powered tools are improving fraud detection, automating risk assessments, and optimizing trading strategies. Google Cloud, for example, helps businesses identify anomalies such as fraudulent transactions, financial crimes, spoofing in trades, and emerging cyber threats.

Meanwhile, logistics companies are adopting AI to streamline supply chains, forecast demand, and manage inventory in real-time. AI can even optimize routes using data from traffic sensors, GPS tracking, and weather forecasts.

AI’s ability to mimic human decision-making and problem-solving is unlocking new levels of efficiency across industries, making it a must-have for businesses looking to stay competitive. And as adoption grows, the impact will only deepen.

Although recent progress makes AI seem new, its history stretches back to the 1950s, and it hasn’t always been smooth sailing. AI has faced many setbacks over the years, from underestimated complexity to over-promised results and unrealistic user expectations.

While AI, no doubt, holds incredible potential, it’s important to remember that it’s not a silver bullet for business challenges. It can’t intuitively figure out how to integrate itself into your processes, nor will it automatically solve every issue. The real power of AI lies in how businesses understand and apply it, but with that power come significant risks.

One major concern for businesses is reputational damage. A 2023 World Economic Forum study found that 75% of Chief Risk Officers (CROs) are worried about the impact AI, especially generative AI, could have on their company’s image.

Cybersecurity is another pressing issue. According to Deloitte, 65% of business leaders expressed concerns about the cybersecurity threats posed by AI, while 64% remain uncertain about how AI handles confidentiality and data protection.

Generative AI also raises alarms about the spread of misinformation, with over half of CEOs fearing its potential to increase internal misinformation and bias within their organizations.

Another critical challenge is the widespread lack of understanding of AI technology. Many organizations struggle with the technical intricacies of AI, especially in how data is collected, stored, and used. Without a proper understanding, businesses are vulnerable to errors, security risks, and poor decision-making.

Bias in AI systems is also a growing challenge. As AI is only as good as the data it’s trained on, biased data can lead to unfair outcomes, especially in hiring or customer service.

Adding to this is the lack of transparency and accountability; many AI applications lack clear verifiability, and it’s often unclear who should be held accountable when AI makes a mistake. Gartner predicted that 85% of AI projects in 2022 would deliver flawed results due to bias or mismanagement, highlighting the importance of accuracy and proper oversight.

Finally, intellectual property (IP) issues are becoming increasingly complex as AI relies heavily on data. Recent lawsuits from eight US newspapers against OpenAI and Microsoft over copyright infringement highlight the challenges surrounding IP ownership in the AI space.

While many are excited about AI’s potential, there’s still a lot of work needed to make sure it’s used safely. Fortunately, many organizations agree that stronger regulations are necessary to guide its development and use.

In response to growing risks, governments worldwide are taking action to regulate AI.

Many countries are developing laws to keep up with AI’s rapid growth and varied applications. According to the Global AI Legislation Tracker, these efforts range from comprehensive legislation to specific use-case regulations and voluntary guidelines.

Stanford University reported a sharp rise in the number of countries with AI-related laws, jumping from 25 in 2022 to 127 in 2023.

While regions like the EU are advancing their own frameworks with the EU AI Act, multilateral collaboration is also increasing.

The OECD’s AI Principles are helping guide countries in developing effective AI policies while promoting global interoperability. These principles are now part of regulatory frameworks across the EU and US and are used by the United Nations and G7 leaders—who are working to balance AI’s potential risks with its benefits.

The International Organization for Standardization (ISO) also plays a vital role in these global AI regulation efforts. Established in 1946, ISO brings together experts from around the world to develop international standards that ensure safety, efficiency, and quality across industries.

Compliance with ISO standards holds particular value for businesses implementing AI. One example is ISO/IEC 5338, the global standard for developing AI systems, co-authored by SIG’s Senior Director and AI expert Rob van der Veer. Compliance demonstrates a commitment to best practices and regulatory compliance, helping businesses enhance their reputation and gain global recognition for quality in their AI processes.

For businesses adopting AI, understanding these regulatory frameworks and standards like ISO is crucial for ensuring safe, ethical, and effective AI integration. We’ve outlined the key elements of the EU AI Act, US AI legislation, and relevant ISO standards to help organizations align with the latest regulations and best practices.

As we’ve seen above, AI offers immense opportunities, but it also brings with it significant challenges. To leverage AI’s full potential while minimizing risks, organizations need to adopt responsible practices and strong frameworks.

To help you get there, our comprehensive AI readiness guide outlines 19 actionable steps across governance, risk, development, and security—designed for board members, executives, and IT leaders.

Here are a few key takeaways:

Ready to make the most of AI in your organization? Stay tuned for our AI readiness guide, coming October 15th, 2024.