11 October 2024

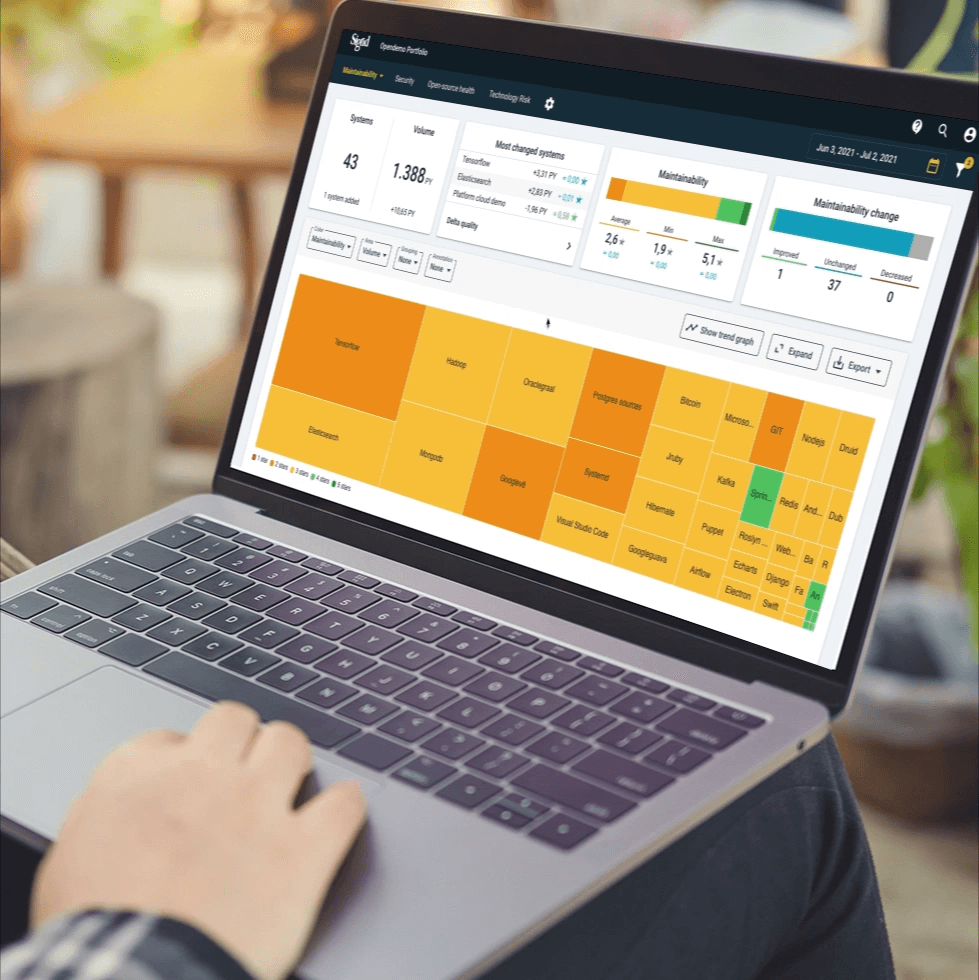

Written by: Software Improvement Group

In tandem with the evolution of AI legislation elsewhere in the world, the USA has been introducing a variety of AI bills, acts, and guiding principles over the past few years—both at the federal and state level.

The National Artificial Intelligence Initiative Act of 2020 promotes and subsidizes AI innovation efforts across key federal agencies.

The Blueprint for an AI Bill of Rights, introduced in 2022, sets out guiding, non-binding principles for the safe and secure development of AI.

State AI legislation like the Colorado AI Act of 2024 takes inspiration from earlier US AI legislation and the EU AI Act, drafting a more comprehensive set of legally binding rules governing safe, secure, and transparent AI implementation in business.

Compliance with these pieces of US AI legislation will be key to avoiding financial penalties, maximizing the benefits of AI in business, minimizing its risks, and ensuring the safe, secure, and trustworthy development and deployment of new AI systems.

Artificial Intelligence was once the stuff of science fiction, but at the time of writing, it is the fastest-adopted business technology in history. Today, a quarter of all US businesses are integrating AI into their operations, and a further 43% are considering AI implementation soon.

While the potential benefits of AI for business, society, healthcare, transport, and culture are significant, these advantages are overshadowed by real risks, including security breaches, misinformation, and flawed decision-making processes.

As with any emerging technology, AI’s rapid evolution has outpaced regulatory frameworks. However, the European Union’s AI Act, the world’s first comprehensive AI law, entered into force on August 1, 2024, marking a turning point in the legal landscape. While the EU leads in AI regulation, other countries are also developing their own frameworks.

As individual regions like the EU continue to develop their own frameworks for AI legislation, multilateral coordination is also on the rise. For example, the ISO has published a number of standards to benefit businesses adopting AI, whilst the Organization for Economic Co-operation and Development released a similar series of AI principles.

The number of discussions about AI taking place within the United Nations and G7 is increasing, too, with emphasis on balancing AI’s potential risks against the many benefits it offers.

This article serves as a general, up-to-date overview of AI legislation in the US, so that business leaders in America and beyond can better prepare for compliance whilst building safer, more secure, and more trustworthy AI use into their operations.

In the United States, the complexity of federalism has made it challenging to implement a unified AI policy. Currently, there is no overarching AI Act. The closest initiative is President Joe Biden’s executive order (EO) on the ‘Safe, Secure, and Trustworthy Development and Use of AI,’ issued on October 30, 2023.

AI regulation in the U.S. consists of various state and federal bills, often addressing only specific aspects, such as the California Consumer Privacy Act, which governs AI in automated decision-making. In other words, America’s AI policy is more akin to a jigsaw puzzle of individual approaches and narrow legislation than it is a centralized strategy. Until a comprehensive AI Act is passed in the US, businesses operating in or with the country will need to be extra vigilant regarding compliance.

By studying the following key legislative measures and principles, organizations can better ensure their AI systems are safe, fair, and compliant with emerging US regulations.

The National Artificial Intelligence Initiative Act of 2020, introduced under the Trump administration, was one of the first major national efforts specifically targeting artificial intelligence. However, its primary focus is less on regulating AI and more on fostering research and development in the field. The Act aims to solidify the United States’ position as a global leader in AI innovation.

The primary purpose of the 2020 Act is to guide AI research, development, and evaluation at various federal science agencies, to drive American R&D into AI technology, and to champion AI use in government. The Trump-era Act advocated for “a more hands-off, free market–oriented political philosophy and the perceived geopolitical imperative of ‘leading’ in AI.”

The American AI Initiative’s central impact on business in the US has been the coordination of AI activities across different federal agencies. Below we list the main agencies affected and their directions, with emphasis on those affecting business across the country.

Building on the Trump-era initiatives, the Biden administration introduced the Blueprint for an AI Bill of Rights in October 2022. This proposal sought to establish core principles to guide how federal agencies and other entities could approach AI.

A legal disclaimer at the top of the document states that it is not legally binding. Instead, it serves as a voluntary framework that agencies, independent organizations, and businesses can choose to follow. In essence, the Blueprint is not official U.S. policy but, as its name suggests, a forward-looking guide for the future of AI.

The driving mantra of the AI Bill of Rights Blueprint is to make “automated systems work for the American people.” The Blueprint seeks to achieve this by establishing five key principles for a future, legally binding AI Act.

We have just examined the two most significant nationwide legislation-related efforts concerning artificial intelligence in the U.S. and found that while one is not legally binding, the other primarily focuses on innovation rather than regulation.

Given America’s unique political landscape and the historical reluctance of the White House to impose heavily on state autonomy, it is at the state level that AI legislation may offer business leaders a clearer vision of what a future U.S. AI Act could entail.

Several states, led by Colorado, Maryland and California, have already passed AI-related laws to further regulate AI use. We’ll take a look specifically at Colorado’s state legislation, as it is the most comprehensive.

The Colorado AI Act has arguably established the foundational framework for a comprehensive US AI Act, borrowing several elements from the EU AI Act.

As the first comprehensive AI legislation in the US, the Colorado AI Act adopts a risk-based approach to AI, similar to that of the EU AI Act, primarily targeting the developers and deployers of high-risk AI systems.

Business leaders in IT and other sectors can prepare for compliance with the Colorado AI Act and other acts which may follow in its footsteps by:

As one of the wealthiest and most powerful nations in the world, the U.S. is understandably expected to align its AI legislation with the approaches taken by other leading entities. Allowing the technology to go unregulated could expose businesses across the nation to significant risks.

And yet, so far, Congress’s approach to AI legislation has been to avoid straying into the territory of regulating AI in business (i.e., the private sector) and instead to champion America’s status as a leader in AI R&D and governmental AI deployment. In part, this is due to the conflicting approaches to AI law taken by the Biden and Trump administrations.

But as AI use in business inevitably continues to expand and the associated risks begin to weigh on business leadership, the challenge for US governance will be to develop a comprehensive, nationwide AI Act.

More challenging still will be to develop an Act which clearly defines AI, measures and categorizes its risks, accounts for its application across all sectors, and establishes clear strategies for risk mitigation whilst preserving its benefits. All of this must be done whilst gathering bipartisan support so that the Act might pass Congress, at a time when the future of US politics is deeply uncertain.

Though legislation on AI in the US is piecemeal, businesses must still take note of the current, emerging, and potential future regulations discussed in this article.

The careful regulation, measurement, assessment, and risk mitigation of AI, as promoted by the USA’s mosaic of AI-related bills, acts, and principles, can actively help business leaders to develop and deploy AI safely, securely, and in a manner which fosters trust among stakeholders.

Moreover, the penalties for AI non-compliance in the US can be hefty.

At present, whilst there is no comprehensive federal Act governing AI use and risk mitigation, there is still a range of laws regulating AI under which non-compliance can result in severe financial penalties, such as in the following examples:

Current US legislation around AI emphasizes either AI as a tool for the country’s economic growth and continued innovation in the field, or AI risk mitigation.

The future of U.S. legislation concerning the integration of AI in business could depend on the outcome of the upcoming 2024 presidential election.

Painting with broad brush strokes, we could say that if Donald Trump is reelected, U.S. AI legislation is likely to remain less stringent than in other countries, prioritizing investment in AI research and development. Conversely, if Harris wins, she may seek to build upon the Biden administration’s focus on AI safety, security, and trustworthiness.

In July of 2024, Trump stated the following during the Republican National Convention:

“We will repeal Joe Biden’s dangerous Executive Order that hinders AI Innovation and imposes Radical Leftwing ideas on the development of this technology, in its place, Republicans support AI Development rooted in Free Speech and Human Flourishing.” – Donald Trump, during the Republican National Convention

However, Aaron Cooper, senior vice president of global policy for BSA The Software Alliance, highlights that there are many similarities between how the Trump and Biden administrations approached AI policy.

Voters haven’t yet heard much detail about how a Harris administration or a second Trump administration would change the development of AI legislation.

“What we’ll continue to see as the technology develops and as new issues arise, regardless of who’s in the White House, they’ll be looking at how we can unleash the most good from AI while reducing the most harm, that sounds obvious, but it’s not an easy calculation.” – Aaron Cooper, Senior Vice President of global policy for BSA The Software Alliance

AI legislation in the US differs significantly from that in other parts of the world. So far, the focus has primarily been on innovation, government AI use, and reinforcing “traditional American values.” However, the introduction of comprehensive state-level laws, such as the Colorado AI Act, is beginning to shift this landscape.

For organizations operating within (and with) the US, navigating the myriad AI bills, acts, and proposals can be challenging. Nevertheless, it’s essential to keep an eye on what is needed for US AI compliance.

Not only will compliance help companies avoid the often-severe penalties for non-compliance, but it also helps maximize the benefits of AI use whilst minimizing its risks.

Readers are encouraged to explore different AI strategies, reassess their current stance on AI in the context of national and international compliance, and align themselves with current and future US AI regulations.

Learn more about AI use and regulation in business by exploring the Software Improvement Group blog.
